I’ve been using Google Experiments (GE) pretty heavily in the last year and the method it uses to send traffic to landing page alternatives has always confused me a little. So yesterday I did some searching and it turns out that GE uses a “multi-armed bandit” method of splitting up traffic between alternatives. Basically this means that each experiment starts with a short evaluation period when traffic really is split up 50/50 (or whatever percentage you choose). After the evaluation period the conversion rates of both alternatives are measured and more traffic is sent to the higher converting page. This evaluation is carried out a few times a day and the traffic split is adjusted accordingly. The reasoning behind this is supposedly twofold:

1. It minimizes the effect on overall conversion for the period of the experiment if one of your alternatives is particularly horrible.

2. It can reach a 95% confidence result much faster, especially when one alternative performs much better than the other.
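As a rough illustration of the evaluate-and-reallocate loop described above, here is a minimal Python sketch. It is not Google's actual algorithm: it assumes a Thompson-sampling-style rule (the traffic share for each page is set to the estimated probability that it is the better one), re-evaluates once a day rather than a few times a day, and uses made-up function and parameter names (`simulate_bandit`, `eval_days`, `draws`).

```python
import random


def simulate_bandit(days=30, visitors_per_day=100, rates=(0.15, 0.15),
                    eval_days=2, draws=1000, seed=0):
    """Simulate a two-page experiment with daily traffic reallocation.

    Assumption: the split is set to the probability that each page has the
    higher true conversion rate, estimated by sampling Beta posteriors.
    This is a stand-in for whatever rule GE really uses.
    """
    rng = random.Random(seed)
    visits = [0, 0]
    conversions = [0, 0]
    share = [0.5, 0.5]          # even split during the evaluation period
    history = []                # traffic split in force after each day

    for day in range(days):
        for _ in range(visitors_per_day):
            arm = 0 if rng.random() < share[0] else 1
            visits[arm] += 1
            if rng.random() < rates[arm]:
                conversions[arm] += 1

        # Once the evaluation period is over, re-estimate the split daily.
        if day + 1 >= eval_days:
            wins = 0
            for _ in range(draws):
                a = rng.betavariate(1 + conversions[0],
                                    1 + visits[0] - conversions[0])
                b = rng.betavariate(1 + conversions[1],
                                    1 + visits[1] - conversions[1])
                if a > b:
                    wins += 1
            share = [wins / draws, 1 - wins / draws]

        history.append(tuple(share))

    return visits, conversions, history


if __name__ == "__main__":
    # Two pages with the SAME true conversion rate and low traffic.
    visits, conversions, history = simulate_bandit(visitors_per_day=20, seed=1)
    print("visits per page:     ", visits)
    print("conversions per page:", conversions)
    print("final traffic split: ", history[-1])
```

Running it with different `seed` values and a small `visitors_per_day` gives a feel for how far the split can drift from 50/50 even when both pages convert at the same true rate.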

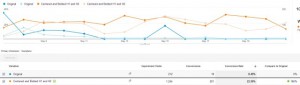

With low traffic pages (say 100 visitors or less per day), if one of the alternatives happens to have a really good first day or two then you can end up with 90% of the traffic going to that one and 10% going to the other. And these really good and bad days DO happen; it’s the nature of random variation and small sample sizes. I often see pages that have average long term conversion rates of 15% having 2% or even 0% conversion days. In the figure above, the light blue line shows the conversion rate of one of my landing pages over a 30 day period. The rate varies quite randomly, from less than 5% to more than 25%.

So imagine the situation where the initially poorly performing alternative is getting just 10 or so visits per day and normally has conversion rates of <10%. It can easily be DAYS before it has another conversion. And each day Google is evaluating that performance and sending the page LESS traffic. Often this is just 1-2 visitors a day. So failure is basically assured.
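The arithmetic behind "it can easily be DAYS" can be sketched in a few lines of Python. This assumes each visitor converts independently at a fixed rate, and it uses 10% as the rate, which is the upper end of the "<10%" figure above, so real waits would be at least this long. The function names are just illustrative.

```python
def p_zero_conversion_day(visitors, rate):
    """Probability that a day with `visitors` visits has no conversions."""
    return (1 - rate) ** visitors


def expected_days_until_conversion(visitors, rate):
    """Mean number of days until the first day with at least one conversion.

    Days are treated as independent trials, so this is the mean of a
    geometric distribution: 1 / P(at least one conversion in a day).
    """
    return 1 / (1 - p_zero_conversion_day(visitors, rate))


if __name__ == "__main__":
    rate = 0.10  # assumed conversion rate for the struggling page
    for visitors in (10, 2, 1):
        p0 = p_zero_conversion_day(visitors, rate)
        wait = expected_days_until_conversion(visitors, rate)
        print(f"{visitors:>2} visitors/day: "
              f"{p0:.0%} of days have no conversion, "
              f"about {wait:.1f} days until the next one")
```

At 2 visitors a day and a 10% rate, about 81% of days have no conversion at all and the expected wait for the next one is a little over 5 days. That is a long time for a page that is being re-scored every day.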

Figure 2: Traffic Distribution During Experiment

The net result of this is that in the last week or so of an experiment virtually ALL of the traffic is often being sent to one alternative if it happened to perform better in the first few days. And I’ve now seen this a number of times. I haven’t done the math on it, but looking at my results I’ve had experiments conclude with the winning result getting 10-20x the traffic of the failing result. You can see an example of this above. The winning alternative (the orange lines) showed a great conversion rate in the first few days, which resulted in less and less traffic being sent to the alternative (the pale blue line). In fact, for the last 10 days of the experiment the poorer performing alternative received almost no traffic. At the end of the experiment the winning result got 1244 visits for 281 conversions and the alternative got just 212 visits with 18 conversions. To my mind 212 visits just isn’t enough to be statistically significant and certainly not enough to declare a conclusive winner.

It turns out that there’s a setting buried in the Google Experiments advanced settings called “Distribute traffic evenly across all variations”. This is OFF by default and needs to be turned on to ensure that the experiment actually uses the 50/50 traffic split (or whatever % you choose). My feeling is that it’s hazardous to accept the result of just one GE that uses the multi-armed bandit method, especially for low traffic landing pages. Multiple experiments are required. Of course this should be true of any A/B test. I also think that if you’re evaluating low traffic pages then you should conduct your experiments using the true 50/50 traffic split and compare those with the multi-armed bandit method.

As an addendum, here’s someone who takes a contrary view to the rosy view presented by Google in the page I linked to above. The folks at Visual Website Optimizer do not think multi-armed bandit is better than regular A/B testing.